A Graphic Suicide Video Is Being Shared on TikTok

- 10th September 2020

- Evgeniy Anisimov

- Child Safety

Social media platform TikTok has hit the headlines yet again over a graphic video circulating on its platform. After initially appearing on a Facebook live stream, the clip quickly spread to other social media platforms, including TikTok.

The disturbing clip, which circulated just days before World Suicide Prevention Day (WSPD) on Thursday 10th September, shows a man taking his own life live on camera at his home. TikTok has since come under fire for not doing enough to remove the video, and for not acting quickly enough.

Ronnie McNutt, 33, live-streamed the harrowing footage from his home in Mississippi on 31st August 2020. There are unconfirmed reports that Mr McNutt had post-traumatic stress disorder and had recently broken up with his girlfriend. He was also an Army veteran who had served in the Iraq War.

TikTok appears to have been slow to remove the footage from its main feed, called ‘For You’. This feed shows users new and emerging videos without them having to search, and it is here that people, including young children, have inadvertently viewed the harrowing scene.

Users have been warning others against watching a clip featuring a man with a grey beard sitting at a desk in his home, because of the traumatic way in which it plays out. Unfortunately, there are reports that the video has been spliced into other clips, such as cat videos, cruelly luring unsuspecting users into viewing it.

A close friend and colleague of Mr McNutt, Josh Steen, told the news site Heavy that he reported the live stream to Facebook several times while Ronnie was still alive, out of concern for his mental health. At the time, Facebook simply told Mr Steen:

"This post will remain on Facebook because we only remove content that goes against our Community Standards. Our standards don’t allow things that encourage suicide or self-injury."

Facebook has not commented further since stating that it was aware of the video and had taken it down the same day. It took the company two hours after his death to remove it, by which time it had already been shared.

TikTok has been racing to remove the video and issued a statement to Business Insider:

"Our systems have been automatically detecting and flagging these clips for violating our policies against content that displays, praises, glorifies, or promotes suicide. We are banning accounts that repeatedly try to upload clips, and we appreciate our community members who've reported content and warned others against watching, engaging, or sharing such videos on any platform out of respect for the person and their family."

Criticism over the handling of graphic content is not levelled solely at TikTok. Facebook and YouTube have also been criticised, with a history of responding slowly to remove violent and graphic images and videos.

Facebook was heavily criticised for its handling of the Christchurch, New Zealand, shootings in 2019. The terrorist live-streamed his rampage, shooting dozens of innocent victims and killing 51 people. Although Facebook has boasted that its algorithm can remove harmful and graphic content within 12 seconds, footage of the attack was still on the platform more than seven weeks later.

A 12-year-old girl in the US live-streamed her suicide on a site called Live.me, and the video was subsequently shared on Facebook. It took Facebook more than two weeks to remove the footage.

In 2019, TikTok failed to notify the authorities of a live-streamed suicide for almost three hours after becoming aware of it. A 19-year-old vlogger had told his followers that he would be streaming ‘a special performance’ the following day. Two hundred and eighty of his followers watched him end his life on 21st February 2019 at 3.23 pm.

TikTok only became aware of the incident after a further 90 minutes of live streaming, 497 comments and 15 complaints. Its first response was a public relations one: it notified its PR team and did not alert the authorities until almost 8 pm, four and a half hours after the young man’s death.

A 21-year-old man in the US murdered a girl and posted photos of her body on Discord and Instagram. The images were hidden behind a filter warning viewers of sensitive content, but anyone who chose to remove the filter could readily view them.

What is being done to stop the spread of such content?

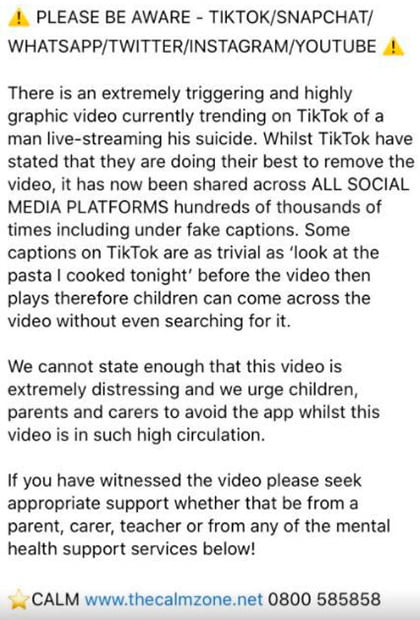

Firstly, parents have been advised to closely supervise their children’s use of such platforms. TikTok has even stated that parents should not allow their children to use the platform for a couple of days.

But is this enough when it is well documented that such videos can still be found weeks later?

Social media platforms have admitted that they need to be more vigilant. Manually reviewing every live stream would be impossible, so they rely on automated systems to help flag content that breaks their guidelines. Once a video has been banned, it can be recognised by a digital ‘tag’ and removed if it is shared again. This becomes much more difficult, however, if someone films the content playing on a screen with their mobile phone and then reposts the recording, which will not carry the same tag.

Automated tools can also compare new uploads against banned content: if images in an upload match those from the banned clip, the upload or reshare is refused. The same can be done with audio, by recognising particular sounds, music or speech and matching them against prohibited content.

Hundreds of organisations and individuals are calling for more effective regulation of all social media platforms, such as more aggressive moderation settings, to weed out posts involving hate, abuse, violence, racism, terrorism, inequality and much more.

The horizon is forever changing in the world of social media.

Help is available if you are struggling with your mental health, or if someone you know is.

- SAMARITANS - available 24 hours a day, 365 days a year.

- MIND - for better mental health.

- THE CALM ZONE - Campaign Against Living Miserably, a leading movement against suicide.

- HEADS TOGETHER - tackling the stigma against mental health.

- PAPYRUS - prevention of young suicide.

- MOVEMBER - supporting men’s health and mental health.
